AI Agents: Using DevOps Metrics with MCP

LLM Beyond Code Generation

LLMs are well known for their code generation capabilities, and the same goes for DevOps-related code (IaC, pipelines, etc.).

However, LLMs (and, in the case of this post, AI Agents) also offer an opportunity to integrate with DevOps processes to enhance quality and release management.

DevOps Data

DevOps practices span multiple domains:

- Packaging

- Security

- Release

- Tests & Quality

- Operation

Because implementing DevOps means automating these practices, it also creates a unique opportunity to collect data at each of these steps of the software lifecycle.

Organizations usually rely on these metrics to inform release and quality decisions. But because systems are complex, and because a release process usually involves more than any single automation can cover, AI Agents can be used to synthesize this data and support those decisions.

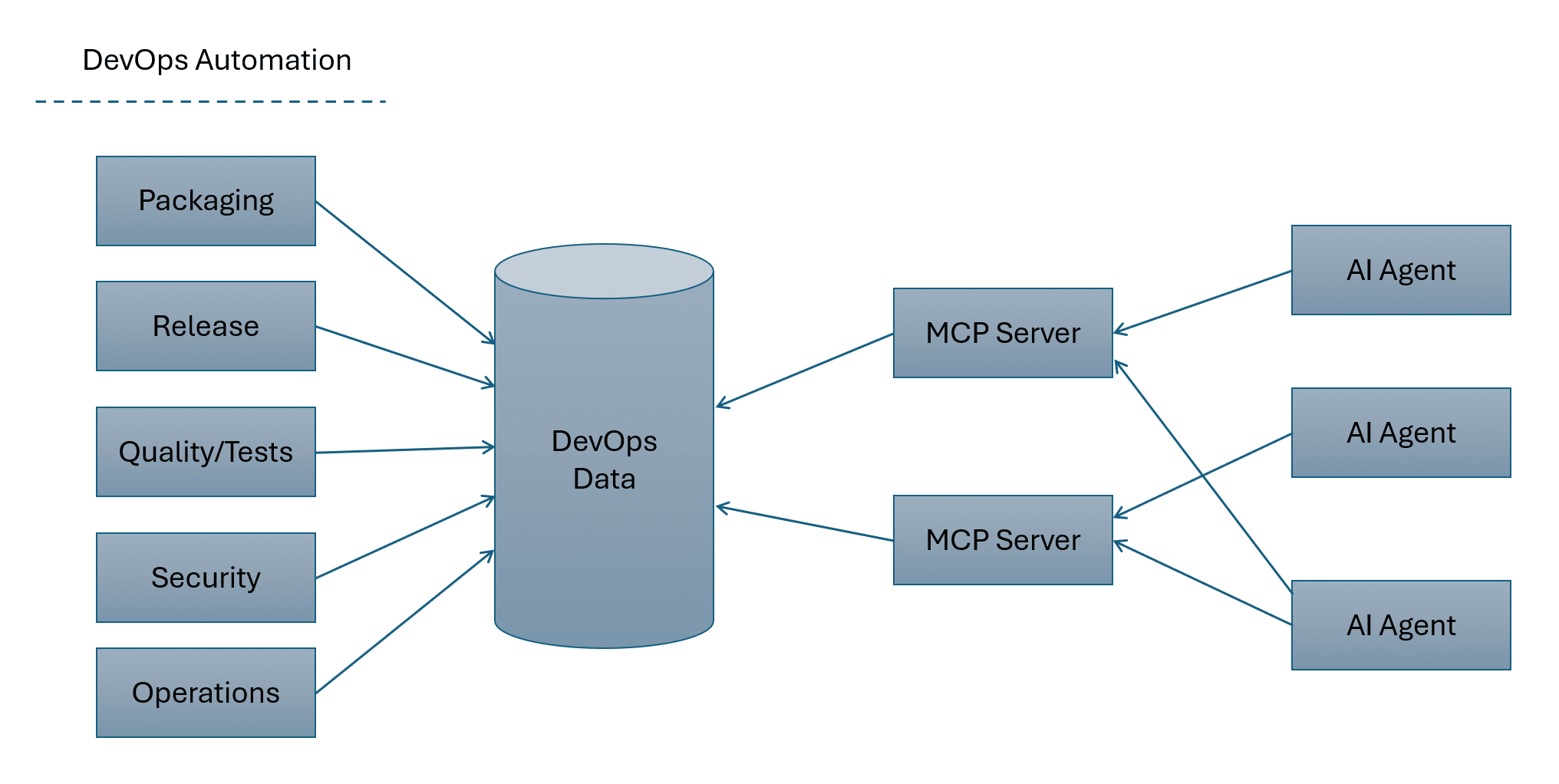

Use Case: Using MCP to Process Data

MCP (Model Context Protocol) is a protocol for exposing data and capabilities to AI Agents.

AI Agents, in turn, use the capabilities of LLMs to collect, process, and act on that information.
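To make the protocol side concrete, here is a simplified, in-memory TypeScript sketch of the two JSON-RPC 2.0 requests an agent relies on when talking to an MCP server: `tools/list` to discover tools and `tools/call` to invoke one. It is not MCP-Framework's API and it omits the initialization handshake and the transport; the `deployments-latest` tool and its hard-coded record are hypothetical. Error handling follows the MCP specification's convention as we understand it: unknown tools are JSON-RPC errors, while failures during execution come back as results flagged with `isError`.

```typescript
// Simplified sketch of an MCP server's tool handling (no transport, no init handshake).
type JsonRpcRequest = {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: { name?: string; arguments?: Record<string, unknown> };
};

type JsonRpcResponse =
  | { jsonrpc: "2.0"; id: number; result: unknown }
  | { jsonrpc: "2.0"; id: number; error: { code: number; message: string } };

interface Tool {
  description: string;
  inputSchema: { type: "object"; properties: Record<string, unknown> }; // JSON Schema
  run: (args: Record<string, unknown>) => unknown;
}

// Hypothetical tool registry; the name and payload are illustrative only.
const tools = new Map<string, Tool>([
  [
    "deployments-latest",
    {
      description: "Latest deployed version of each component per environment",
      inputSchema: { type: "object", properties: {} },
      run: () => [{ component: "website", environment: "production", version: "1.3.1" }],
    },
  ],
]);

function handle(req: JsonRpcRequest): JsonRpcResponse {
  const { id } = req;
  if (req.method === "tools/list") {
    // Advertise each tool's name, description, and input schema.
    const list = Array.from(tools, ([name, t]) => ({
      name,
      description: t.description,
      inputSchema: t.inputSchema,
    }));
    return { jsonrpc: "2.0", id, result: { tools: list } };
  }
  if (req.method === "tools/call") {
    const name = req.params?.name ?? "";
    const tool = tools.get(name);
    if (!tool) {
      // Unknown tool: reported as a JSON-RPC "Invalid params" error.
      return { jsonrpc: "2.0", id, error: { code: -32602, message: `Unknown tool: ${name}` } };
    }
    try {
      // Tool output goes back to the agent as text content (serialized JSON here).
      const text = JSON.stringify(tool.run(req.params?.arguments ?? {}));
      return { jsonrpc: "2.0", id, result: { content: [{ type: "text", text }] } };
    } catch (e) {
      // Execution failures are returned inside the result, flagged with isError.
      return { jsonrpc: "2.0", id, result: { content: [{ type: "text", text: String(e) }], isError: true } };
    }
  }
  // -32601 is the standard JSON-RPC "Method not found" code.
  return { jsonrpc: "2.0", id, error: { code: -32601, message: `Method not found: ${req.method}` } };
}
```

An agent framework such as Mastra plays the client role in this exchange: roughly, it sends `tools/list` to learn which tools exist, lets the LLM pick one, then sends `tools/call` and hands the returned text back to the model.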

In this use case, the setup is an AI Agent that queries an MCP server exposing DevOps data.

In the code example, we are going to use:

- Mastra for the AI Agent (https://mastra.ai)

- MCP-Framework for a simple MCP server (https://mcp-framework.com). This server mocks data that would usually be collected from a CI/CD process.

Example Definitions

For the MCP server, let's define two tools:

Tool 1: Getting the latest deployments. This would return information like:

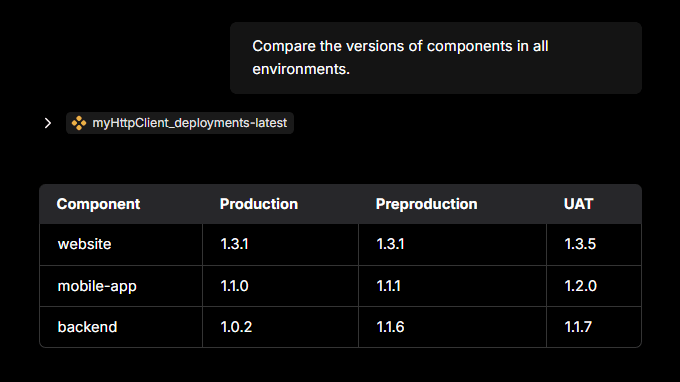

```typescript
[
  { component: "website", environment: "production", version: "1.3.1", dateDeployed: "2025-05-01T02:13:36.477Z" },
  { component: "mobile-app", environment: "production", version: "1.1.0", dateDeployed: "2025-05-20T02:13:36.477Z" },
  { component: "backend", environment: "production", version: "1.0.2", dateDeployed: "2025-05-15T02:13:36.477Z" },
  { component: "website", environment: "preproduction", version: "1.3.1", dateDeployed: "2025-05-20T02:13:36.477Z" },
  { component: "mobile-app", environment: "preproduction", version: "1.1.1", dateDeployed: "2025-05-22T02:13:36.477Z" },
  { component: "backend", environment: "preproduction", version: "1.1.6", dateDeployed: "2025-05-19T02:13:36.477Z" },
  { component: "website", environment: "uat", version: "1.3.5", dateDeployed: "2025-06-01T02:13:36.477Z" },
  { component: "mobile-app", environment: "uat", version: "1.2.0", dateDeployed: "2025-06-02T02:13:36.477Z" },
  { component: "backend", environment: "uat", version: "1.1.7", dateDeployed: "2025-06-03T02:13:36.477Z" },
]
```
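To make the shape of this payload explicit, here is a minimal TypeScript sketch that types the records above and answers one of the questions an agent might receive: which version of each component is in production. The `Deployment` type and the `deployedIn` function are illustrative names, not part of MCP-Framework or Mastra.

```typescript
// Hypothetical types for the "latest deployments" payload.
type Environment = "production" | "preproduction" | "uat";

interface Deployment {
  component: string;
  environment: Environment;
  version: string;
  dateDeployed: string; // ISO 8601 timestamp
}

// The mock records from the payload above.
const deployments: Deployment[] = [
  { component: "website", environment: "production", version: "1.3.1", dateDeployed: "2025-05-01T02:13:36.477Z" },
  { component: "mobile-app", environment: "production", version: "1.1.0", dateDeployed: "2025-05-20T02:13:36.477Z" },
  { component: "backend", environment: "production", version: "1.0.2", dateDeployed: "2025-05-15T02:13:36.477Z" },
  { component: "website", environment: "preproduction", version: "1.3.1", dateDeployed: "2025-05-20T02:13:36.477Z" },
  { component: "mobile-app", environment: "preproduction", version: "1.1.1", dateDeployed: "2025-05-22T02:13:36.477Z" },
  { component: "backend", environment: "preproduction", version: "1.1.6", dateDeployed: "2025-05-19T02:13:36.477Z" },
  { component: "website", environment: "uat", version: "1.3.5", dateDeployed: "2025-06-01T02:13:36.477Z" },
  { component: "mobile-app", environment: "uat", version: "1.2.0", dateDeployed: "2025-06-02T02:13:36.477Z" },
  { component: "backend", environment: "uat", version: "1.1.7", dateDeployed: "2025-06-03T02:13:36.477Z" },
];

// Returns "component@version" for one environment, most recent deployment first.
function deployedIn(env: Environment, data: Deployment[]): string[] {
  return data
    .filter((d) => d.environment === env) // filter() returns a new array, so sort() won't mutate the input
    .sort((a, b) => Date.parse(b.dateDeployed) - Date.parse(a.dateDeployed))
    .map((d) => `${d.component}@${d.version}`);
}

const production = deployedIn("production", deployments);
// ["mobile-app@1.1.0", "backend@1.0.2", "website@1.3.1"]
console.log(production);
```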

Tool 2: Getting the latest test results:

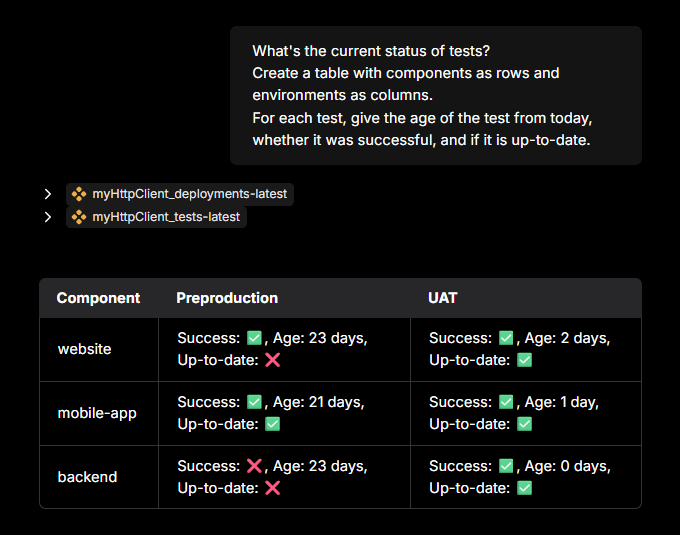

```typescript
[
  { component: "website", environment: "preproduction", success: true, dateTested: "2025-05-19T02:13:36.477Z" },
  { component: "mobile-app", environment: "preproduction", success: true, dateTested: "2025-05-22T03:13:36.477Z" },
  { component: "backend", environment: "preproduction", success: false, dateTested: "2025-05-19T03:13:36.477Z" },
  { component: "website", environment: "uat", success: true, dateTested: "2025-06-01T03:13:36.477Z" },
  { component: "mobile-app", environment: "uat", success: true, dateTested: "2025-06-02T03:13:36.477Z" },
  { component: "backend", environment: "uat", success: true, dateTested: "2025-06-03T03:13:36.477Z" },
]
```
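The agent's main job with these two payloads is correlation: matching each test result to the deployment of the same component and environment. A minimal sketch of that join follows, assuming at most one record per component and environment (as in the mock data) and treating a test that ran before its deployment as outdated. It uses the preproduction and uat records above; `correlate` and `TestStatus` are hypothetical names.

```typescript
// Hypothetical record types mirroring the two mock payloads.
interface Deployment { component: string; environment: string; version: string; dateDeployed: string }
interface TestResult { component: string; environment: string; success: boolean; dateTested: string }
interface TestStatus { component: string; environment: string; version: string; success: boolean; outdated: boolean }

const deployments: Deployment[] = [
  { component: "website", environment: "preproduction", version: "1.3.1", dateDeployed: "2025-05-20T02:13:36.477Z" },
  { component: "mobile-app", environment: "preproduction", version: "1.1.1", dateDeployed: "2025-05-22T02:13:36.477Z" },
  { component: "backend", environment: "preproduction", version: "1.1.6", dateDeployed: "2025-05-19T02:13:36.477Z" },
  { component: "website", environment: "uat", version: "1.3.5", dateDeployed: "2025-06-01T02:13:36.477Z" },
  { component: "mobile-app", environment: "uat", version: "1.2.0", dateDeployed: "2025-06-02T02:13:36.477Z" },
  { component: "backend", environment: "uat", version: "1.1.7", dateDeployed: "2025-06-03T02:13:36.477Z" },
];

const tests: TestResult[] = [
  { component: "website", environment: "preproduction", success: true, dateTested: "2025-05-19T02:13:36.477Z" },
  { component: "mobile-app", environment: "preproduction", success: true, dateTested: "2025-05-22T03:13:36.477Z" },
  { component: "backend", environment: "preproduction", success: false, dateTested: "2025-05-19T03:13:36.477Z" },
  { component: "website", environment: "uat", success: true, dateTested: "2025-06-01T03:13:36.477Z" },
  { component: "mobile-app", environment: "uat", success: true, dateTested: "2025-06-02T03:13:36.477Z" },
  { component: "backend", environment: "uat", success: true, dateTested: "2025-06-03T03:13:36.477Z" },
];

// Joins each test result with the deployment of the same component/environment.
function correlate(deps: Deployment[], results: TestResult[]): TestStatus[] {
  const rows: TestStatus[] = [];
  for (const t of results) {
    const d = deps.find((x) => x.component === t.component && x.environment === t.environment);
    if (!d) continue; // no matching deployment: nothing to correlate
    rows.push({
      component: t.component,
      environment: t.environment,
      version: d.version,
      success: t.success,
      // A test is outdated if it ran before the current deployment.
      outdated: Date.parse(t.dateTested) < Date.parse(d.dateDeployed),
    });
  }
  return rows;
}

const statuses = correlate(deployments, tests);
console.table(statuses);
```

With the mock data, only the website's preproduction test is outdated (it ran on May 19, the day before 1.3.1 was deployed), and only the backend's preproduction test failed.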

The AI Agent is defined with the following instructions:

You are a release management agent. You check the quality of the current application environment.

Your primary function is to help users get a view of the environment. When you respond:

- Correlate data from the deployments and test tools when necessary

- Consider that tests are done only in preproduction and uat environments

- A test is outdated if it ran before the deployment time

- Versions are promoted from uat to preproduction and from preproduction to production

- A version is ready to be promoted to production if:

  - It has successful tests in preproduction

  - Its tests are not outdated in preproduction

- Prefer to format responses as tables

Use the myHttpClient_deployments-latest tool to fetch current deployment status data.

Use the myHttpClient_tests-latest tool to fetch the current status of tests.

Try to correlate the data from both tools to provide a comprehensive view of the environment.

This definition can then be tested in Mastra's playground interface.
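Because the promotion rule in these instructions is deterministic, it can also be written as plain code, for example to cross-check the agent's answer about what is ready for production. The following is a minimal sketch, assuming one test result per component and environment and using only the preproduction records from the mock payloads; `readiness` and its reason strings are hypothetical.

```typescript
// Hypothetical record types mirroring the two mock payloads.
interface Deployment { component: string; environment: string; version: string; dateDeployed: string }
interface TestResult { component: string; environment: string; success: boolean; dateTested: string }
type Readiness = { component: string; version: string; ready: boolean; reason: string };

const deployments: Deployment[] = [
  { component: "website", environment: "preproduction", version: "1.3.1", dateDeployed: "2025-05-20T02:13:36.477Z" },
  { component: "mobile-app", environment: "preproduction", version: "1.1.1", dateDeployed: "2025-05-22T02:13:36.477Z" },
  { component: "backend", environment: "preproduction", version: "1.1.6", dateDeployed: "2025-05-19T02:13:36.477Z" },
];

const tests: TestResult[] = [
  { component: "website", environment: "preproduction", success: true, dateTested: "2025-05-19T02:13:36.477Z" },
  { component: "mobile-app", environment: "preproduction", success: true, dateTested: "2025-05-22T03:13:36.477Z" },
  { component: "backend", environment: "preproduction", success: false, dateTested: "2025-05-19T03:13:36.477Z" },
];

// A preproduction version is ready when its preproduction test
// succeeded and did not run before the preproduction deployment.
function readiness(deps: Deployment[], results: TestResult[]): Readiness[] {
  return deps
    .filter((d) => d.environment === "preproduction")
    .map((d) => {
      const t = results.find((r) => r.component === d.component && r.environment === "preproduction");
      const base = { component: d.component, version: d.version };
      if (!t) return { ...base, ready: false, reason: "no preproduction test" };
      if (Date.parse(t.dateTested) < Date.parse(d.dateDeployed)) {
        return { ...base, ready: false, reason: "test outdated" };
      }
      if (!t.success) return { ...base, ready: false, reason: "test failed" };
      return { ...base, ready: true, reason: "tests passed and up to date" };
    });
}

console.table(readiness(deployments, tests));
```

With the mock data, only mobile-app 1.1.1 comes out ready: the website's preproduction test predates its latest deployment, and the backend's test failed.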

Results and Examples

Now that the AI Agent can retrieve the DevOps metrics, it can process them and answer questions about the state of the system, such as:

- What are the versions deployed in production?

- What are all the versions across all environments?

- What is the lead time between preprod and production?

- What is the status of tests for all the components?

- Based on the current tests and deployments, which versions are ready (or not) to be deployed to production?
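Several of these questions come down to reshaping the deployment payload. For example, "What are all the versions across all environments?" is a pivot of the deployment records into a component-by-environment table, which also matches the instruction to prefer tables. A minimal sketch, with a hypothetical `versionMatrix` helper and environments ordered along the promotion path from uat to preproduction to production:

```typescript
// Only the fields needed for the pivot (dates omitted).
interface Deployment { component: string; environment: string; version: string }

const environments = ["uat", "preproduction", "production"];

const deployments: Deployment[] = [
  { component: "website", environment: "production", version: "1.3.1" },
  { component: "mobile-app", environment: "production", version: "1.1.0" },
  { component: "backend", environment: "production", version: "1.0.2" },
  { component: "website", environment: "preproduction", version: "1.3.1" },
  { component: "mobile-app", environment: "preproduction", version: "1.1.1" },
  { component: "backend", environment: "preproduction", version: "1.1.6" },
  { component: "website", environment: "uat", version: "1.3.5" },
  { component: "mobile-app", environment: "uat", version: "1.2.0" },
  { component: "backend", environment: "uat", version: "1.1.7" },
];

// Builds a Markdown table: one row per component, one column per environment.
function versionMatrix(data: Deployment[]): string {
  const components = Array.from(new Set(data.map((d) => d.component))); // first-seen order
  const header = `| component | ${environments.join(" | ")} |`;
  const separator = "|" + " --- |".repeat(environments.length + 1);
  const rows = components.map((c) => {
    const cells = environments.map(
      (env) => data.find((d) => d.component === c && d.environment === env)?.version ?? "-",
    );
    return `| ${c} | ${cells.join(" | ")} |`;
  });
  return [header, separator, ...rows].join("\n");
}

console.log(versionMatrix(deployments));
```

With the mock data, the website row reads `| website | 1.3.5 | 1.3.1 | 1.3.1 |`, showing that version 1.3.5 is still waiting in uat.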

Going Further

Organizations benefit from collecting metrics across all the steps of the software lifecycle. These metrics can feed on-demand reporting, but they can also serve as input for AI Agents. MCP servers are a good way to expose this data to LLMs without tightly coupling those systems.

In the example above, we used Mastra's interactive playground. However, these agents can also run as services and be integrated into the software lifecycle. The examples above use DevOps metrics to help with decision making, or even troubleshooting. Beyond reading data, MCP integrations can also trigger actions based on the workflows and queries these agents execute, which can be a powerful addition to the development and release lifecycle.